
The Hidden Cost of "AI-Powered": Why Ethical AI Starts With Open Source

The AI vendors aren't going to tell you this part.

11/14/2025 · 5 min read

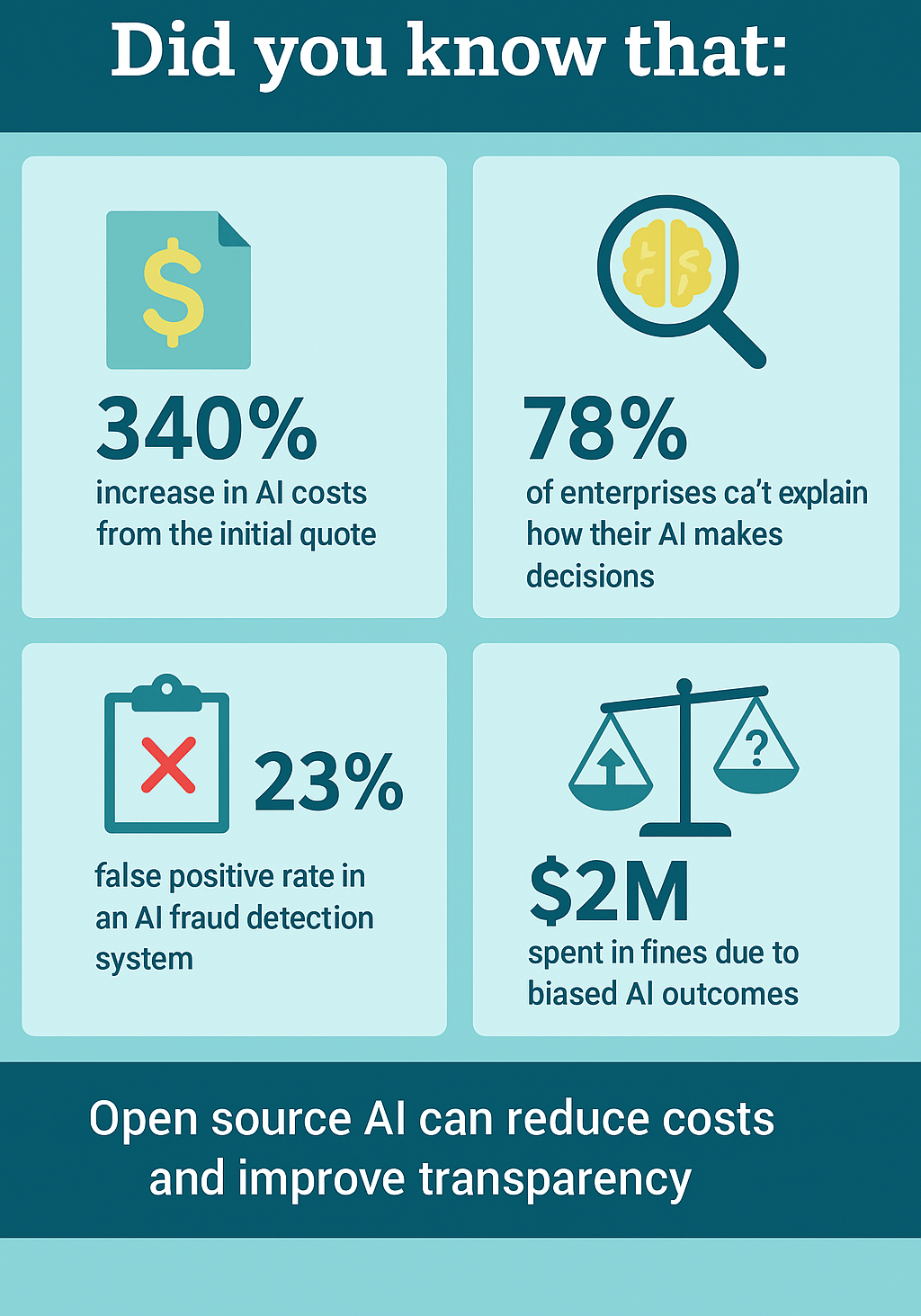

Last quarter, I sat across from a CFO who'd just discovered their company's AI spending had ballooned to $847,000 annually—a 340% increase from the initial quote. Worse, when they asked their vendor to explain why their fraud detection system was flagging legitimate customers at a 23% false positive rate, the response was essentially: "Our proprietary algorithm is too complex to explain."

That's not innovation. That's liability wrapped in marketing language.

The Transparency Crisis Nobody's Talking About

The AI gold rush has created a dangerous pattern: companies are embedding decision-making systems they fundamentally don't understand into their most critical business processes.

According to a 2024 Gartner study, 78% of enterprises using third-party AI services cannot explain how their AI systems make decisions. Yet these same systems are:

Screening job candidates

Approving or denying loans

Setting insurance premiums

Determining credit limits

Pricing products dynamically

Flagging fraud (and legitimate customers)

The question isn't whether your AI works. The question is: can you prove it works fairly, legally, and consistently when regulators or customers demand answers?

For most companies using proprietary AI, the honest answer is no.

What "Proprietary AI" Actually Means

When vendors tout their "proprietary AI algorithms," here's what they're actually selling you:

Zero algorithmic visibility. You can't see how decisions are made. You're told to trust the black box.

Data rights you didn't negotiate. Buried in those 47-page terms of service? Clauses giving vendors perpetual rights to train models on your customer data, your transaction patterns, your competitive intelligence.

No liability for bad outcomes. Read the indemnification clauses. When their AI makes a discriminatory decision or costly error, you're holding the bag.

Pricing opacity. Usage-based pricing that scales unpredictably. I've seen bills jump 400% in six months with zero changes to usage patterns—just "model improvements" that required more compute.

Lock-in by design. Switching costs that make leaving economically irrational, even when performance degrades or prices skyrocket.

This isn't hypothetical. In 2023, a major retail bank paid $32 million in regulatory fines because their vendor's AI lending algorithm exhibited racial bias—bias they couldn't detect because they couldn't audit the model.

The Business Case for Open Source AI

Let's be clear: this isn't about ideology. This is about risk management and financial efficiency.

Cost Control That Actually Works

Open source AI models eliminate the ransom pricing endemic to proprietary vendors:

Infrastructure costs can drop 60-80%. Run models on your own infrastructure instead of paying per-API-call markup.

No surprise bills. You control compute resources and can optimize costs based on actual business value.

Negotiating leverage. Even if you use managed services, open source gives you credible alternatives that prevent vendor lock-in pricing.

One fintech client reduced their AI infrastructure spend from $1.2M annually to $310K by migrating from a proprietary NLP vendor to open source models they tuned in-house. Same performance. Roughly one-quarter the cost.
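To check a savings claim like this against your own numbers, a break-even calculation is a reasonable first step. The sketch below is a simplified model under stated assumptions. Self-hosting is represented as a fixed monthly cost (hardware, hosting, on-call) plus a small marginal cost per call. The vendor is represented as a flat price per 1,000 calls. The function name and all figures are hypothetical placeholders, not data from the migration described above.

```python
import math
from typing import Optional

def break_even_calls_per_month(
    fixed_monthly: float,
    api_price_per_1k: float,
    self_hosted_cost_per_1k: float,
) -> Optional[int]:
    """Monthly call volume above which self-hosting beats a per-call API.

    Simplified, hypothetical model: the API charges a flat price per 1,000 calls;
    self-hosting costs a fixed monthly amount plus a smaller cost per 1,000 calls.
    """
    margin_per_1k = api_price_per_1k - self_hosted_cost_per_1k
    if margin_per_1k <= 0:
        # In this model, self-hosting never wins on per-call cost alone.
        return None
    # Volume at which the per-call savings cover the fixed monthly cost.
    return math.ceil(fixed_monthly / margin_per_1k * 1000)

# Hypothetical figures: $20,000/month fixed, $10 vs. $2 per 1,000 calls.
print(break_even_calls_per_month(20_000, 10.0, 2.0))  # 2500000
```

Below that volume, the per-call API is cheaper in this model. Above it, the fixed cost is spread across more calls. A real comparison also has to include staffing, migration effort, and any volume discounts the vendor offers.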

Algorithmic Sovereignty = Risk Mitigation

When you can inspect, audit, and modify the models making business-critical decisions, you gain:

Regulatory compliance you can document. The EU AI Act, proposed US algorithmic accountability laws, and financial services regulations increasingly require explainable AI. Open source models can be audited. Proprietary ones cannot.

Bias detection and correction. You can test for disparate impact across protected classes. With proprietary models, you're trusting the vendor caught it—and hoping they tell you if they didn't.

Customization for your context. A model trained on generic internet data makes different decisions than one trained on your specific industry, customer base, and business rules. Open source lets you close that gap.

Business continuity. Your AI strategy isn't hostage to vendor acquisitions, pivots, or sudden EOL announcements.
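As an illustration of the bias-testing point above, one widely used first screen is the disparate impact ratio. It divides each group's favorable-outcome rate by the rate of the most favored group. Under the "four-fifths rule" from the US Uniform Guidelines on Employee Selection Procedures (originally an employment-selection rule of thumb), a ratio below 0.8 is treated as evidence of possible adverse impact. The sketch below assumes you can export decisions as (group, approved) pairs. The function names and sample data are hypothetical. A flag is a prompt for deeper statistical and legal review, not a finding.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Favorable-outcome rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact(
    decisions: Iterable[Tuple[str, bool]], threshold: float = 0.8
) -> Tuple[Dict[str, float], List[str]]:
    """Each group's rate divided by the highest group's rate, plus groups below threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        # Nobody was approved, so no group fares worse than another: report parity.
        return {g: 1.0 for g in rates}, []
    ratios = {g: r / best for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged

# Hypothetical sample: group A approved 60 of 100, group B approved 42 of 100.
sample = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 42 + [("B", False)] * 58
ratios, flagged = disparate_impact(sample)
print(flagged)  # ['B'] (B's ratio is about 0.70, below 0.8)
```

In practice you would also check group sizes and statistical significance, because small groups produce noisy ratios.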

The Innovation Paradox

Here's what surprises most executives: open source AI often outperforms proprietary alternatives.

Why? Because many of the best researchers publish their models openly. Llama 3.1, Mistral, and Falcon aren't hobby projects. They're state-of-the-art models developed by top AI labs and improved by thousands of contributors.

The proprietary advantage has narrowed to near-zero for most business applications. What you're paying for isn't better AI—it's convenience and brand recognition.

What "Ethical AI" Actually Requires

Ethical AI isn't about noble intentions or corporate values statements. It's about operational practices:

1. Explainability. Can you articulate why the AI made a specific decision? Can you defend that reasoning to a regulator or in court?

2. Auditability. Can you test the model for bias, errors, or drift? Can third parties verify your claims?

3. Accountability. When something goes wrong, can you fix it—or are you waiting on a vendor's roadmap?

4. Data sovereignty. Do you control how your data is used to train and improve models?

5. Transparency. Can employees and customers understand the AI's role in decisions that affect them?

Proprietary AI vendors check maybe one of these boxes. Open source enables all five.
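The auditability item mentions testing for drift. One common check, widely used in credit-risk model monitoring, is the Population Stability Index (PSI). PSI compares the distribution of a model input or score today against a baseline. It is computed as the sum over bins of (a - e) * ln(a / e), where e and a are the baseline and current shares in each bin. The sketch below uses equal-width bins over the combined range for simplicity (quantile bins of the baseline are also common). The 0.1 and 0.25 cut-offs in the comment are conventional rules of thumb, not regulatory thresholds. The sample scores are hypothetical.

```python
import math
from typing import List, Sequence

def psi(baseline: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index between two samples of a score or feature."""
    lo = min(min(baseline), min(current))
    hi = max(max(baseline), max(current))
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # top edge goes in the last bin
            counts[idx] += 1
        # Floor empty bins at a tiny share so the log term stays finite.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(baseline), shares(current)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Rules of thumb: < 0.1 stable, 0.1-0.25 worth watching, > 0.25 investigate.
baseline_scores = [i / 100 for i in range(100)]
shifted_scores = [0.5 + i / 200 for i in range(100)]
print(psi(baseline_scores, baseline_scores))  # 0.0
print(psi(baseline_scores, shifted_scores) > 0.25)  # True
```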

The Questions Your Board Should Be Asking

If you're deploying AI in your organization, these questions separate due diligence from expensive mistakes:

On vendor AI:

What data are we giving them rights to use, and for how long?

How do they test for algorithmic bias, and can we see the results?

What's our liability exposure if their AI makes a discriminatory or costly error?

What are our actual switching costs if they 3x pricing or get acquired?

Can we export our training data and model weights if we leave?

On open source alternatives:

What infrastructure and talent would we need to run comparable models in-house?

What's our total cost of ownership over 3 years versus vendor pricing trajectories?

Which models are mature enough for our use case, and what's the support ecosystem?

What's our risk if a critical model loses community support?
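Even a rough model makes the three-year total-cost-of-ownership question concrete. This sketch makes two assumptions. The vendor bill grows by a fixed percentage each year. Self-hosting has a one-time migration cost plus flat annual infrastructure and staffing costs. It ignores discounting, exit fees, and changes in volume. The function names and every figure are hypothetical placeholders to be replaced with your own numbers.

```python
def vendor_tco(first_year_cost: float, annual_increase: float, years: int = 3) -> float:
    """Total vendor spend when the bill grows by `annual_increase` (e.g. 0.25) each year."""
    total, cost = 0.0, first_year_cost
    for _ in range(years):
        total += cost
        cost *= 1 + annual_increase
    return total

def self_hosted_tco(one_time_migration: float, annual_infra: float,
                    annual_staff: float, years: int = 3) -> float:
    """One-time migration cost plus flat yearly infrastructure and staffing."""
    return one_time_migration + years * (annual_infra + annual_staff)

# Hypothetical inputs: $400K/year vendor bill rising 25% a year,
# versus $150K migration, $120K/year infrastructure, $250K/year staff.
vendor = vendor_tco(400_000, 0.25)
in_house = self_hosted_tco(150_000, 120_000, 250_000)
print(vendor, in_house)  # 1525000.0 1260000
```

Setting `annual_increase` to 0 or raising `annual_staff` quickly shows which assumption drives the answer. That sensitivity is usually more useful to a board than the point estimate.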

The Migration Path Forward

You don't flip a switch from proprietary to open source AI. But you can build optionality:

Phase 1: Assessment (30 days)

Map your current AI spend, use cases, and vendor dependencies. Identify where you're most exposed to pricing risk or algorithmic opacity.

Phase 2: Proof of Concept (60 days)

Pick one non-critical AI application. Deploy an open source alternative in parallel. Measure performance, cost, and operational overhead.

Phase 3: Strategic Migration (6-18 months)

For systems where open source proved viable, build migration roadmaps. Renegotiate vendor contracts with credible alternatives in hand.
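Running an open source alternative "in parallel" during a proof of concept is often done in shadow mode. The incumbent system keeps making live decisions while the candidate model scores the same inputs, and only the comparison is recorded. The sketch below assumes both systems can be wrapped as Python callables that return a decision. `incumbent`, `candidate`, the per-call costs, and the threshold rules in the example are hypothetical stand-ins, not real vendor or model APIs.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Sequence

@dataclass
class ShadowReport:
    agreement_rate: float
    incumbent_cost: float
    candidate_cost: float
    disagreements: List[Any] = field(default_factory=list)

def shadow_compare(
    inputs: Sequence[Any],
    incumbent: Callable[[Any], Any],
    candidate: Callable[[Any], Any],
    incumbent_cost_per_call: float,
    candidate_cost_per_call: float,
) -> ShadowReport:
    """Run both systems on the same inputs; only the incumbent's output is acted on."""
    disagreements = []
    for x in inputs:
        live = incumbent(x)  # decision actually used in production
        shadow = candidate(x)  # recorded for comparison only
        if live != shadow:
            disagreements.append(x)
    n = len(inputs)
    return ShadowReport(
        agreement_rate=(n - len(disagreements)) / n,
        incumbent_cost=n * incumbent_cost_per_call,
        candidate_cost=n * candidate_cost_per_call,
        disagreements=disagreements,
    )

# Hypothetical stand-ins: two fraud rules that flag transactions above different amounts.
report = shadow_compare(
    [500, 1100, 1500, 900, 2000],
    incumbent=lambda amount: amount > 1000,
    candidate=lambda amount: amount > 1200,
    incumbent_cost_per_call=0.01,
    candidate_cost_per_call=0.002,
)
print(report.agreement_rate, report.disagreements)  # 0.8 [1100]
```

Reviewing the disagreement list by hand is usually the most informative part of the pilot. It shows whether the candidate is wrong or whether the incumbent was.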

The companies winning this transition aren't going 100% open source. They're building hybrid strategies that give them negotiating power, algorithmic control, and cost predictability.

The Bottom Line

The AI vendors selling you "proprietary advantages" are making a bet: that you'll prioritize short-term convenience over long-term control.

But the executives I work with are starting to do the math differently:

What's the cost of vendor lock-in when they double prices?

What's the liability of decisions we can't explain?

What's the opportunity cost of customizations we can't make?

What's the regulatory risk of algorithms we can't audit?

Open source AI isn't perfect. It requires more technical capability. It shifts responsibility in-house. It demands you build expertise instead of renting it.

But it also means you actually understand the systems running your business. You control your costs. You can prove your AI is fair. And when regulations tighten or vendors pivot, you're not scrambling for exits.

The question isn't whether to use AI. The question is whether you'll use AI you can explain, audit, and control—or AI you're just hoping works.

Because when the board, regulators, or customers ask "how does your AI work," the wrong answer is "we trust the vendor."



Fractional CTO Support | CTO Advisor Pro

20+ Years | $1.2B+ in Value Delivered | 250+ Clients


contact@ctoadvisorpro.com

© 2025. All rights reserved.

Reston, Virginia, USA